Introduction to Dummy Cache

This article introduces our new project, Dummy PHP Cache. Its primary goal is to improve out-of-the-box PHP performance and, as a result, to speed up Magento and the ecommerce stores built on it. We will describe the features and functionality of the tool in a separate post, so don’t miss the updates!

Meet PHP – The Good, the Bad and the Ugly (and Very Popular)

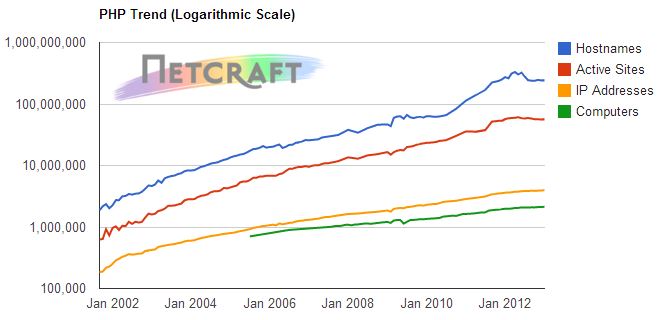

PHP is the most popular programming language used in web development: 83% of all websites are based on it. Despite two decades of predictions to the contrary, the number of PHP-based websites keeps growing. The pace has been quite slow in recent years, but this can be explained by the already huge market share.

PHP has relatively good backward compatibility: the latest version is just two years old, but it already runs on 7% of all websites.

Despite its poor design, non-obvious behavior, hard and messy debugging, and abundance of pitfalls, PHP is still the main language for web development on the planet. As long as nothing changes the current market situation, you can discuss RoR / Django as much as you like, talk about fashionable Node.js, or look at ASP.NET, which has been around since 2000, but the fact remains.

These innate PHP bottlenecks, caused by the roots and natural evolution of the language, lead to a typical problem in any complex application: everything works extremely slowly. While the framework is fresh and clean, everything runs fast and well. But once you add business logic to the framework and load real working data, even latest-generation servers, with huge amounts of RAM and a fast disk subsystem fully covered by the in-RAM file cache, run into performance issues and slow down dramatically.

When the architecture of a future CMS is designed, real PHP bottlenecks are rarely worked through, and a full caching system covering all application levels is rarely built. Even rarer is a caching system free of the major cache problem: broken automatic invalidation of stale entries. There is an even worse problem: incorrect data gets cached and the app becomes inoperable, so you have to clear the caches at all levels, after which the application runs under stress until they warm up again. The worst-case scenario looks like this: the cache is cleared during an evening peak of visitors, every second request gets a 503 error, and you lose visitors, orders, and, of course, money.
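
One common way to avoid flushing every cache level at once is to tag entries and invalidate only the affected group. The following is a minimal sketch of that idea, written in Python purely for illustration; the `TaggedCache` class and its method names are hypothetical and are not taken from Dummy PHP Cache or from Magento.

```python
_MISSING = object()  # sentinel: distinguishes "no entry" from a cached None


class TaggedCache:
    """In-memory cache whose entries can be invalidated by tag (illustrative only)."""

    def __init__(self):
        self._entries = {}  # key -> cached value
        self._tags = {}     # tag -> set of keys carrying that tag

    def set(self, key, value, tags=()):
        self._entries[key] = value
        for tag in tags:
            self._tags.setdefault(tag, set()).add(key)

    def get(self, key, default=None):
        return self._entries.get(key, default)

    def invalidate_tag(self, tag):
        """Drop only the entries carrying `tag`; return how many were removed."""
        removed = 0
        for key in self._tags.pop(tag, set()):
            if self._entries.pop(key, _MISSING) is not _MISSING:
                removed += 1
        return removed
```

With entries tagged by the data they depend on, a change to the catalog would call `invalidate_tag("catalog")` and leave unrelated pages warm, so the application does not have to rebuild everything during a traffic peak.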

Development convenience and release-speed pressure leave their mark: developers who don’t understand the details of how the chosen or built-in ORM works write code that issues huge, ugly, and extremely inefficient SQL queries.

As a result, serving a simple GET request triggers hundreds or even thousands of blocking kernel calls and hundreds of complex database queries, even in production mode with caches warmed up at every level. Consequently, the application is slow and overloads the servers.
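
The query-count problem is easy to reproduce. The sketch below, in Python with an in-memory SQLite database purely for illustration, compares a loop that issues one query per row (the pattern a misused ORM tends to generate) with a single joined query. The schema, the `CountingDb` wrapper, and all function names are assumptions made up for this example.

```python
import sqlite3


def build_demo_db():
    """Create a tiny in-memory schema: 50 orders with 3 items each."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT);
        CREATE TABLE items (id INTEGER PRIMARY KEY, order_id INTEGER, sku TEXT);
    """)
    conn.executemany(
        "INSERT INTO orders (id, customer) VALUES (?, ?)",
        [(i, f"customer-{i}") for i in range(1, 51)],
    )
    conn.executemany(
        "INSERT INTO items (order_id, sku) VALUES (?, ?)",
        [(i, f"sku-{i}-{j}") for i in range(1, 51) for j in range(3)],
    )
    conn.commit()
    return conn


class CountingDb:
    """Thin wrapper that counts how many queries are sent to the database."""

    def __init__(self, conn):
        self.conn = conn
        self.queries = 0

    def query(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def items_per_order_naive(db):
    """One query for the orders, then one more query per order."""
    result = {}
    for (order_id,) in db.query("SELECT id FROM orders"):
        rows = db.query("SELECT sku FROM items WHERE order_id = ?", (order_id,))
        result[order_id] = sorted(sku for (sku,) in rows)
    return result


def items_per_order_joined(db):
    """The same data fetched with a single JOIN."""
    result = {}
    rows = db.query(
        "SELECT o.id, i.sku FROM orders o JOIN items i ON i.order_id = o.id"
    )
    for order_id, sku in rows:
        result.setdefault(order_id, []).append(sku)
    return {order_id: sorted(skus) for order_id, skus in result.items()}
```

Both functions return the same mapping, but the naive version sends one query per order plus one for the order list, while the joined version sends exactly one. On a real page with several such loops, the counts add up to the hundreds of queries described above.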

Diving Down the Rabbit Hole

We have been working with PHP for more than ten years. During that period, we saw a lot of self-written CMS where UNION JOIN is executed more than a hundred times in a loop on unindexed neighboring fields. We also saw dozens of popular, overloaded CMS and frameworks with third-party extensions written in a terrible style, where one unsuccessful (or, for a malicious user, successful) request takes the server out of service and requires a hardware reset, or where a shell is uploaded with elevated privileges to a poorly configured web server and the attacker manages to rebuild the web server with his own patches without any obstacles.

What do we do in such cases? Needless to say, we go through the logs trying to figure out what led to the infection or the failure, study tracebacks, replay some of the users’ requests on a test host with a debugger and profiler attached, and manually parse hundreds of gigabytes of collected profiles. All of that just to find a bug in the framework core tied to an equality check on a value returned from a C API function, a SELECT * in a loop with LIMIT 14, 100 on a table with a million rows, and so on.

Of course, if you are a developer, you have your own collection of stories in which a painful week of debugging came down to a trivial error: a wrong sign, an unchecked condition, or thoughtless use of resources.

It may seem that the problem is caused by developers who make childish mistakes due to fatigue and inattention. But if you look at the problem from the outside, it becomes obvious that it lies solely with the language, which practically insists on bad style and pushes you toward verbosity, complex branching with cyclomatic complexity > 30, overengineering, and complicated, often unnecessary, non-obvious class hierarchies. Writing the code any other way is virtually impossible because it is too expensive, and the developer becomes a hostage of deadlines. Still, we remember that PHP will remain the workhorse of the entire Internet for a long time.

Magento – A Wired PHP Beast

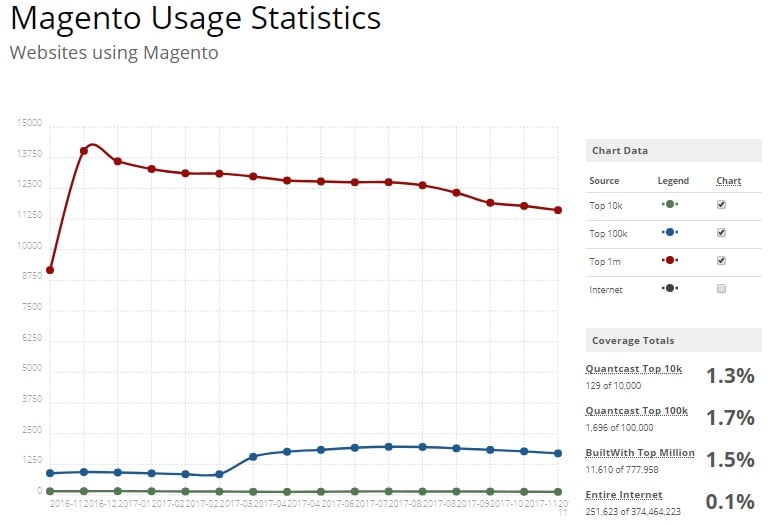

We spent more than a week studying the call graphs of both Magento 2 and Magento 1, manually debugging slow elements, each of which was either constantly invalidated from the Magento 2 cache or never got there at all.

Magento is a well-structured, awesome, and at the same time complex system. Even in production mode with warm caches, caching five or six Magento 2 bottlenecks is enough to improve responsiveness by 15-20%. It’s scary to imagine, but there are companies that keep Magento on shared hosting with very slow drives and RAM crammed by an overloaded and largely unconfigured DBMS. The rest of the memory is constantly occupied by PHP-FPM workers, each requiring up to a gigabyte of memory for an ordinary request at peak. So it is no surprise that everything crashes at the drop of a hat: crawling the sitemap in a dozen threads is enough. Magento 2 is extremely slow in this situation.

You can profile manually, but any modern project contains hundreds of thousands of source files, a complicated module autoloading system, and a very complex inheritance hierarchy that takes a year to sort out, and only if you use a full-fledged IDE. Doing everything manually is unbearably long, very monotonous, and extremely unproductive. But there is a better way to improve ecommerce performance.

The Fun Begins Here

A few years ago, we developed a small script to analyze the infected code of ≈100 sites on WP / Joomla / Drupal. It parsed each source file, built an abstract syntax tree, analyzed the changes in each file across all versions in the file system snapshots, decoded the obfuscated code, and produced hypotheses. After the first pass, the operator had to resolve ≈200 ambiguous cases by simply looking at a standard diff in the console. After the second pass, tens of millions of infected files were cleaned, directory permissions were automatically corrected, and the problems were solved.

If a team of ten developers had to open each source file manually and read the uniquely obfuscated code by eye, it would take at least a week of constant work. You must admit that such work would be a complete waste of effort. The script, however, helped a lot. For a long time afterwards, it kept discovering new backdoors and, after deleting them, sent a report built from the query log that pointed to the calls and arguments that had led to the infection.

When we profiled Magento 2.1, we manually scanned dozens of gigabytes of profiles (we got tired after the first hundred). It soon became obvious that even in production mode with a warm cache, Magento still has room for acceleration. We cached only five Magento 2 bottlenecks and got an 18% increase in responsiveness, while the load dropped by one third.

Now we combine automated load testing, profile parsing, and analysis of problem areas in terms of caching safety. The same Dummy PHP Cache can be used for any PHP application: each PHP source file is parsed and analyzed, a unique fingerprint is computed for the file and for each block, an abstract syntax tree is built, the indexed code is matched against the profiler output, hypotheses are generated automatically from the database, and problem points are flagged with their scope taken into account. It’s a pity that PHP is dynamically typed; in C / C++, for instance, reading signatures is much less complicated. Still, it is possible to build hypotheses from the bodies of functions and methods. Once this software was applied to the shell utility that puts Magento into production mode, we tripled Magento 2 performance and significantly reduced memory consumption.
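
To make the indexing step more concrete, here is a minimal sketch of fingerprinting a file and each of its function blocks, then joining the index with profiler timings. It is an illustration only: it uses Python's own `ast` module on Python source as a stand-in for a PHP parser, and the function names, the profile format (function name mapped to seconds), and the threshold are all assumptions, not the actual Dummy PHP Cache implementation.

```python
import ast
import hashlib


def fingerprint(text):
    """Short, stable fingerprint of a piece of text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:16]


def index_source(source):
    """Fingerprint the whole file and every function block found in its AST.

    Block fingerprints are computed from the AST dump, so comments and
    whitespace changes do not alter them; the file fingerprint is computed
    from the raw text, so any edit changes it.
    """
    tree = ast.parse(source)
    blocks = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef):
            blocks[node.name] = fingerprint(ast.dump(node))
    return {"file": fingerprint(source), "blocks": blocks}


def hot_blocks(index, profile, threshold):
    """Match indexed blocks against profiler timings (name -> seconds).

    Returns (name, block fingerprint, seconds) for every indexed block at or
    above the threshold, slowest first. Profiler entries that are not in the
    index are ignored.
    """
    hits = [
        (name, index["blocks"][name], seconds)
        for name, seconds in profile.items()
        if name in index["blocks"] and seconds >= threshold
    ]
    return sorted(hits, key=lambda hit: hit[2], reverse=True)
```

Keying cache hypotheses by block fingerprint rather than by file path means a hypothesis stays attached to a function as long as its logic is unchanged, and is dropped automatically as soon as the body is edited.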

The market offers plenty of modules for speeding up PHP and all kinds of caching systems, but it lacks Magento modules that safely cache frequently called heavy functions (deadly heavy for shared hosting) that return the same content every time.
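
Caching a heavy function that always returns the same content for the same arguments is plain memoization. The sketch below shows the pattern in Python for brevity; the `memoize` decorator, the `load_config` function, and the `calls` counter are hypothetical names for illustration and do not describe how Dummy PHP Cache is implemented.

```python
import functools


def memoize(func):
    """Cache results per argument tuple; arguments must be hashable."""
    cache = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in cache:
            cache[args] = func(*args)  # the heavy call runs once per argument set
        return cache[args]

    wrapper.invalidate = cache.clear  # explicit hook for dropping stale results
    return wrapper


calls = {"count": 0}  # counts how often the heavy body really runs


@memoize
def load_config(scope):
    """Stand-in for an expensive function that returns the same content every time."""
    calls["count"] += 1
    return {"scope": scope, "currency": "USD"}
```

Repeated calls with the same `scope` return the stored result without re-running the body. The safety question raised above is exactly the hard part this sketch leaves out: memoization is only correct when the function has no hidden inputs, and `invalidate()` must be called whenever the underlying data changes.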

If Dummy PHP Cache improves response speed by at least 20%, or reduces the load on the system by as much, that is already very good. But we are much more ambitious: our goal is to create a universal module that transparently detects slow and dangerous areas on the fly, without the additional overhead that becomes catastrophic for production applications when a profiler is attached, and that makes it possible to run Magento even on shared hosting. PHP performance is extremely important, and our aim is to improve it with this project.